The promises and pitfalls of vibe coding

September 2025

Mark Zuckerberg’s well-known approach to innovation at Facebook was encapsulated in his phrase “Move fast and break things.” In a 2009 Business Insider interview, he stated, “Unless you are breaking stuff, you are not moving fast enough” – a philosophy that has influenced the innovation strategies of many tech companies ever since.

In 2025, that phrase feels woefully irresponsible – particularly as we see digital technologies advance at unprecedented speed, often causing real-world harm for citizens and the planet.

It is often claimed that Artificial Intelligence (AI) will be the biggest disruptor the world has seen since the Industrial Revolution: a multi-faceted technological colossus set to reshape every corner of society and business, yet still largely a mystery to most.

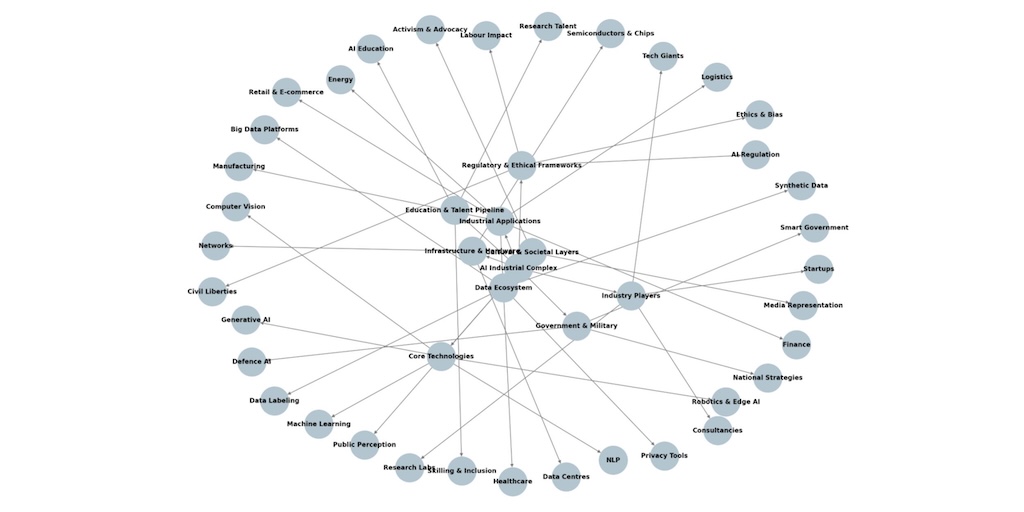

Experimental sketch of the ecosystem – showing complexity.

Investment in the AI industrial complex is staggering. There is a global race for AI superpower status, with countries such as Saudi Arabia, the UK and France squaring up to the US and China in a bid to be a major force. And guess what: people are moving fast and breaking things. Massive data centres are being built that consume enormous amounts of energy, algorithms are being trained on biased data, and the threat of an employment crisis is very real – with low-wage workers and women set to be disadvantaged the most.

Globally, governments are actively investing in AI to streamline public services, improve efficiency and better serve citizens. However, AI readiness and the ability, or inability, of countries to adopt and regulate these technologies will also shape international competitiveness, equity between citizens and countries, ethical standards and future governance.

While its past, current and potential impact is alarming, the future of AI is not predetermined. It takes many human decisions to set the course and decide how things evolve and who stands to benefit, and ethical governance is needed to develop ethical policies and procedures.

In the UK, the Government published its AI Opportunities Action Plan in January, outlining plans to maximise AI’s potential to drive growth and benefit people across the UK – including advocating for its use in the public sector. The plan notes the potential for AI to radically change public services by automating routine tasks, making services quicker and more efficient, and making better use of Government data to target support at those who need it.

Both National and Local Government bodies are being encouraged to adopt AI within their services and processes. Photo: Bridgend District Council.

“But it also brings with it risks that must be managed to effectively support adoption and maintain public trust,” the plan acknowledges, citing fairness, safety and privacy. Indeed, managing these risks was a major point of discussion at the Digital Leaders Public Sector Insights AI Week in March, where I gave a presentation on digital innovation in uncertain, complex and emergency environments.

With so much at stake, it has arguably never been more important not to move fast and break things. But we can still move quickly – carefully. With that in mind, this article will reflect on the debate, while drawing on guidance, toolkits and principles to highlight the importance of care-ful innovation and good governance.

The need to arm ourselves with knowledge was a key takeaway from the Digital Leaders event, and one that underpinned my presentation.

The ever-changing narrative around AI and its sociotechnical impacts can lead to feelings of overwhelm; many of us feel daunted by the intensification of automation across all sectors, organisations and individual practices – whether we are working in it or observing from afar.

AI chatbots and automation tools are increasingly being pushed on citizens within digital workspaces and personal communication – Microsoft, Google Gemini, WhatsApp – often without explicit consent. It has become our individual responsibility to improve our knowledge and understanding of these tools and technologies; to think critically, challenge hype and, fundamentally, not accept a tech-determinist roadmap from the tech companies at the helm of AI – many of which, arguably, will be thinking about the financial bottom line and the power that comes with it.

Thankfully, many responsible leaders are advocating for tools to better support the public procurement of AI. One such person is Professor Alan Brown of Exeter University, who is also AI director at Digital Leaders and held a fellowship at the Alan Turing Institute.

Discussing how to find the balance in digital power dynamics, Brown says we must first acknowledge the political dimensions of AI-based technologies and not pretend they are neutral tools: “When evaluating new AI technologies, my personal approach is to focus not just on defining ROI and efficiency goals, but also describing their governance implications: Who gains authority and who loses it? What values are encoded in this system? What dependencies are we creating? Whose interests are prioritized by default?”

These are all critical questions that need answering truthfully and thoughtfully.

Albert Sanchez-Graells, Professor of Economic Law and Co-Director of the Centre for Global Law and Innovation (University of Bristol Law School), meanwhile, recognises another problem: there is a gap in public sector digital skills preventing the public buyer from adequately understanding the technologies it seeks to buy. Therefore, “the public buyer risks procuring AI it does not understand, which is already a widespread phenomenon in the private sector.”

It doesn’t help that there is so much noise, marketing hype and complexity surrounding it all. On my own quest for clarity, I have come across some useful resources, which I have rounded up below to help others feel less overwhelmed and more informed.

Representing a significant step change in responsible AI adoption, the UK Government published a playbook for AI in February. The playbook – designed specifically to help public sector organisations use AI safely, effectively and securely – includes 10 principles that should be upheld when using AI. These range from understanding the capabilities and limitations of AI, to working with commercial colleagues from the outset, to simply having the skills and expertise needed to implement AI solutions.

Again, Professor Alan Brown has published an in-depth analysis of the playbook, which I would highly recommend reading. According to Brown, while the principles reveal a thoughtful approach to balancing innovation with responsibility, there are additional considerations in the context of digital transformation at large, complex organisations – for instance, integration with legacy systems and cross-department coordination.

Signalling a national commitment to responsible AI use, similar guidance has been produced in Wales and Scotland. The Welsh version of the guidance includes a range of examples of successful uses of AI in the public sector – from helping to diagnose cancer to creating a ‘lost woodland’ dataset – while the Scottish AI Playbook includes a case study about how AI-automated image cropping helped estimate heat loss in homes.

These examples demonstrate the wide-ranging impact and potential of AI, and the importance of ensuring the public sector is equipped with the skills, knowledge and confidence to use it in a way that mitigates risk and maximises positive impact for people and planet.

A major part of the AI education piece is around data and privacy, with cyber security risks intensifying as businesses embed AI into various operations.

According to the National Cyber Security Centre, some of the most dangerous flaws in AI systems are ‘AI hallucination’ (producing incorrect statements), bias and gullibility, the creation of toxic content, and ‘data poisoning’ (susceptibility to being corrupted through manipulation of the data a system is trained on).

These are serious issues with serious consequences, so it is paramount that tools are developed ethically and that security is integrated into AI projects and workflows from the outset.

Another significant milestone this year came with the launch of the UK’s Code of Practice for Cyber Security of AI, which addresses cyber security risks to AI. The Information Commissioner’s Office has also designed an AI toolkit for organisations seeking to better understand best practice in data protection-compliant AI – to “reduce risk to individuals’ rights and freedoms” caused by their own AI systems.

There are plenty of other specialised toolkits elsewhere, detailing how to harness opportunities in areas such as digital accessibility and civic AI. This toolkit, for example, gives advice on how civil society organisations and local authorities can empower communities to address the climate crisis. These are matters that have a critical real-world impact and which require innovation with care.

There is no fixed position on AI; the sands are constantly shifting, so it is crucial to ask questions and get informed – particularly when employing it in the public sector, where the risk of causing harm is significant.

It is a challenge, but with the right collaboration, partnerships and care, it is possible to deliver digital innovation with demonstrable positive impact. In practice, it requires policymakers to implement robust data privacy and transparency regulations, ensure that labour laws protect fair treatment of workers, and embed diversity and inclusion into government funding frameworks.

It’s a challenge that Calvium is well-prepared to address, as proud members of the Digital Leaders AI Expert platform. As proponents of tech as a force for good, we combine data ethics with ISO and Cyber Essentials Plus accreditation, and clearance for government contracts. This means we help clients adopt AI responsibly, considering impacts at every stage and ensuring innovation is both safe and valuable.